You would never have thought I'd write about AI. But here we are.

I recently tried out NotebookLM. While I don't use such tools in my daily workflow, it is fascinating to see how far we've come with AI. Mozilla has also launched its own AI assistant, Orbit, which is geared towards summarizing web content.

On the surface, I wonder why there are so many AI tools geared solely towards simplifying slop created by humans: countless tools whose only purpose is summarizing content.

But on a deeper level, I started questioning whether said slop is actually created by humans. For one, we know that many websites now use AI to automate the writing of blog posts, articles, technical documents, etc. There are even AI tools that create videos, which eventually end up on YouTube. Even when a video is not entirely AI-generated, it is often made with a lot of input from an LLM: from the overall script to the dialogue, LLMs are doing a lot of the work for YouTube creators these days.

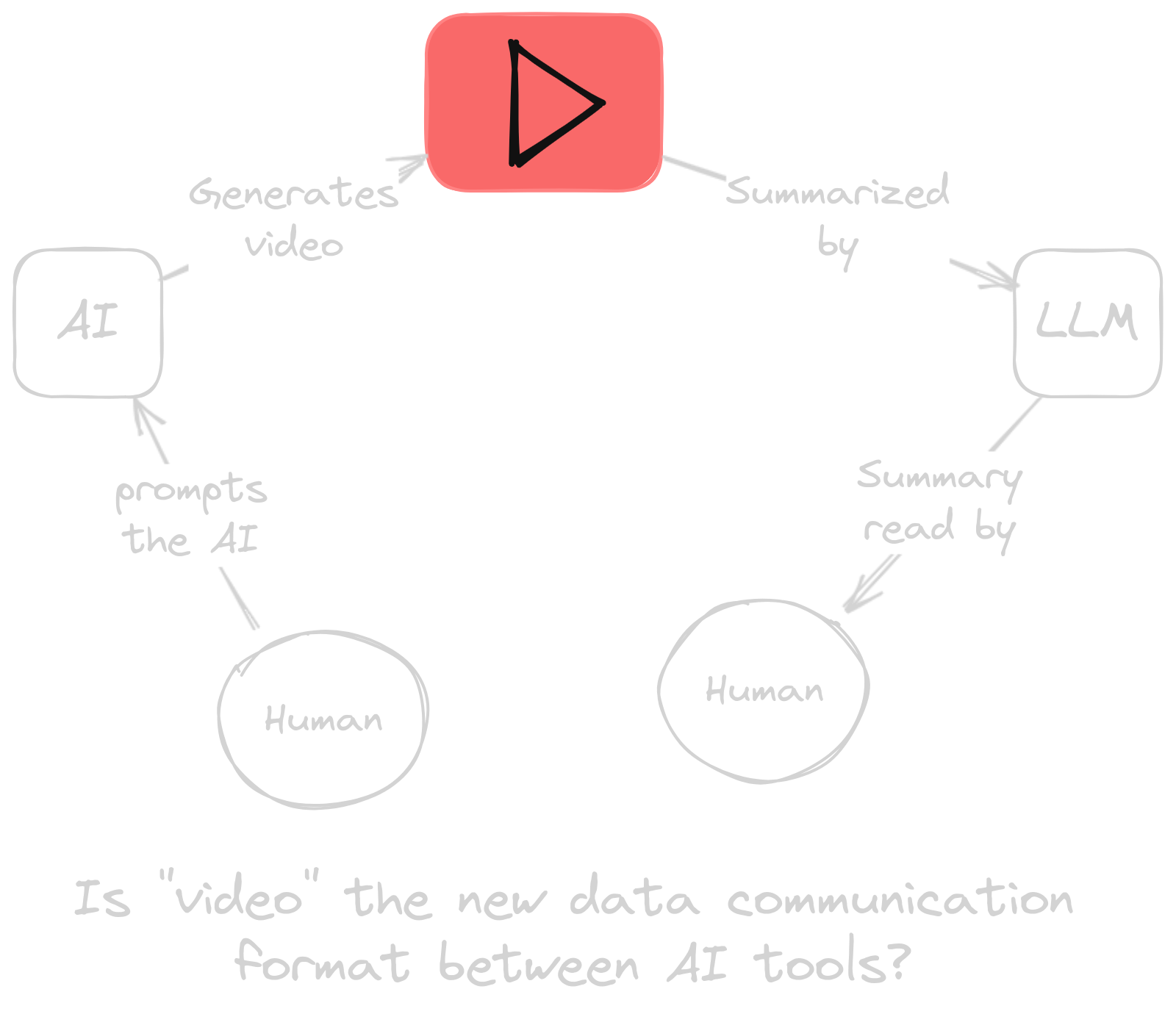

We're in a situation where AI is creating content and AI is consuming content. We, the supposed consumers, are actually consuming the AI's output, not the actual content itself. Take NotebookLM summarizing a YouTube video, for example. It is an AI tool that gives you a summary to digest easily, without requiring you to watch the whole video. It's possible that the video itself was composed and generated by an AI tool. In the near future, I predict this will be the norm rather than a mere possibility.

So what are the two ends of this data? It is generated by AI and consumed by AI. In that case, is a YouTube video (or, more precisely, its transcript) or a blog post a good communication medium between two AI systems?

Application-to-application interaction has long been handled through Application Programming Interfaces (APIs). For example, web services typically talk to each other via REST APIs. In our case, two AI tools are interacting through a video. Well, not exactly video yet, since NotebookLM works with transcripts (which are plaintext). But I'm pretty sure we'll soon have (if we don't already) a tool that can extract information directly from video.

In such a scenario, someone really ought to be working on interoperability between different AI tools: a common data exchange format, a protocol, or whatever. Extracting information from a medium meant for humans is rarely the most efficient approach for computers.
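To make the idea a little more concrete, here is a minimal sketch of what a machine-readable payload might look like if a publisher shipped one alongside the human-facing content. Everything here is hypothetical: the `ContentPayload` name and its fields are made up for illustration and don't come from any existing standard or tool, and JSON is just one plausible encoding.

```python
import json
from dataclasses import dataclass, field, asdict


# Hypothetical schema for illustration only; these field names are not
# part of any existing standard.
@dataclass
class ContentPayload:
    title: str
    source_url: str
    summary: str
    key_points: list[str] = field(default_factory=list)
    generated_by_ai: bool = False

    def to_json(self) -> str:
        # Serialize to JSON so another tool can read the content directly,
        # instead of scraping a page or parsing a video transcript.
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> "ContentPayload":
        # Rebuild the payload on the consuming side.
        return cls(**json.loads(text))


payload = ContentPayload(
    title="Example video",
    source_url="https://example.com/video",
    summary="A short description of the video.",
    key_points=["Point one", "Point two"],
    generated_by_ai=True,
)
encoded = payload.to_json()
decoded = ContentPayload.from_json(encoded)
print(decoded == payload)  # True
```

The consuming tool gets the summary and key points as structured fields, with no summarization step needed at all.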

I don't know where I'm going with this one, but the prospect of most of the internet soon being bots is slightly concerning. Meta just showed us that it wants its social media platforms to have fake bot profiles, sanctioned by Meta itself. The idea didn't get a good reception. But who knows, in the near future it might be commonplace to interact with bots on social media. Against this backdrop, the Dead Internet Theory comes to mind.

With the huge amount of data that will flow back and forth across the internet, wasting bandwidth on communication in a non-ideal format isn't a long-term solution.